This is part 2 of the Amazon series, breaking down the AWS business. AWS has long been perceived as the gem within Amazon - so let’s see if it fits the bill. This is my 4th deep dive, following the ones on:

Richemont

Mastercard

Amazon Ecommerce

The point is to understand the essence of these businesses and what makes them special. This deep dive will go through topics such as:

History

Market breakdown

Business Model

Competitive advantages and competition

Runway

Capital Cycle

AI unit economics

End Demand

How AWS is placed

Financials

My view

I will also finish with a summary of the overall investment thesis and look at the combined financials and valuation. If you somehow reach the end, then I hope it answers these questions:

Why is AWS a special business

Why is cloud infrastructure an attractive industry

Why is this industry a three player oligopoly

Why vast investments in AI can work

Why AWS can win in AI

History

The AWS story is nothing short of remarkable. How does an Ecommerce company come up with the idea of developing the infrastructure behind the world’s computing? It really should have come from a company like IBM or Oracle.

But Amazon did it. How?

The rumoured origin story is that Amazon wanted to sell its unused capacity during off-seasons to other businesses.

This was not the case. Rather, it came down to the internal challenges Amazon found in operating a proprietary technology stack and infrastructure services for Ecommerce. Increasing capacity, launching new projects and innovating were all far too cumbersome.

AWS CEO Matt Garman explains:

Number one is that AWS was never about excess capacity of Amazon. Just like math doesn’t work. You can imagine that I’ve heard that narrative, it sounds nice. And as soon as Christmas time comes around, if I have to take Netflix’s servers away so that we can support retail traffic, that doesn’t really work as a business. So that was never the idea, intent, or goal of AWS.

At the same time, Amazon knew it was very good at managing and operating IT infrastructure at scale. So in 2002, the idea was floated around to outsource these capabilities to external parties. The decision was made pretty quickly and would take 3 years of planning and development before launch. AWS was formed as a startup internally under the leadership of now group CEO Andy Jassy.

AWS would remove the need to purchase expensive equipment from hardware providers like HP, IBM, Oracle, Microsoft and VMware. Companies could also free up staff from managing large amounts of data and on-premise facilities.

In 2006, AWS launched its first service, the Simple Storage Service (S3) that enabled data storage on the internet. From its 2006 press release:

"Amazon S3 is based on the idea that quality Internet-based storage should be taken for granted," said Andy Jassy, vice president of Amazon Web Services. "It helps free developers from worrying about where they are going to store data, whether it will be safe and secure, if it will be available when they need it, the costs associated with server maintenance, or whether they have enough storage available. Amazon S3 enables developers to focus on innovating with data, rather than figuring out how to store it."

5 months later, Amazon Elastic Compute Cloud (Amazon EC2) was released providing on-demand compute - the resources needed to process data and run applications. It basically enabled customers to scale applications up and down flexibly and quickly. Storage and compute remain two key revenue sources for AWS today. CEO Jassy notes:

All apps need a compute solution, almost all need storage and most need a database. Most developers need some combination of the three, so we were strong about the platform approach right away.

The cloud significantly reduced barriers to entry and saw considerable early success, with thousands of startups and developers signing up. If we think about it, the cloud democratised development much as platforms did in other industries:

TSMC developed capital intensive fabrication plants which removed the need for chip designers to produce chips themselves in-house

Shopify started front-end commerce and fulfilment services enabling sellers to scale brands effectively with custom built products

The company expanded into new services including databases, content delivery and security, and growth was phenomenal. AWS even powered through the financial crisis and actually benefited as more organisations sought to reduce capital costs and variable expenses.

Microsoft Azure first announced its market entry in October 2008 and was generally available by 2010. Google Cloud entered in 2008 and was made generally available in 2011. AWS was essentially the only player in the market from its 2006 launch until 2010 - around 4 years.

During the 2010s, AWS continued to expand services, build data centres and bring on more functionality. The company cemented itself as the outright market leader.

Cloud Computing

Cloud computing enabled the delivery of IT services over the internet. The pay as you go model was great: startups did not need to invest significant upfront costs and could scale as their needs grew. Some notable examples include Workday, Spotify and Monday.com, which were all able to develop cloud-first business models and service millions of subscribers over time.

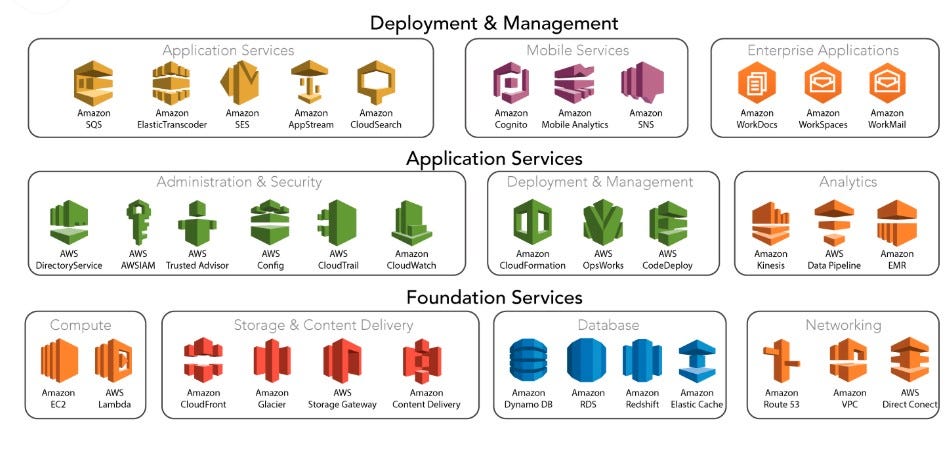

The segment AWS mainly operates in is cloud infrastructure, defined as:

‘Cloud infrastructure services’ means services that provide access to processing, storage, networking, and other raw computing resources (often referred to as infrastructure as a service, IaaS) as well as services that can be used to develop, test, run and manage applications in the cloud (often referred to as platform as a service, PaaS).

Basically, businesses outsource the costly and burdensome task of building and managing physical data centres to AWS. The company then rents this space to customers and develops best of breed services for customers to leverage. Some key services today include compute, storage, database and security.

AWS democratised technology and enabled smaller organisations to access best of breed products - effectively leveling the playing field. CEO Jassy noted in 2015:

AWS has pursued an extremely disruptive, lower margin model in a high-margin industry. It’s conceivable that there are those out there who still hope cloud computing doesn’t get traction.

The advantages of cloud computing can be seen below.

The consumption-based model means:

There are no upfront costs and maintenance costs

No staff needed to manage infrastructure

You pay for more resources when needed and cut those that are not

The cloud has advantages around cost, speed, flexibility, innovation, security and more. There are of course cons including some expensive use cases and reduced control and flexibility. Overall, the pros outweigh the cons and this has driven consistently higher adoption over time.

Customer offerings

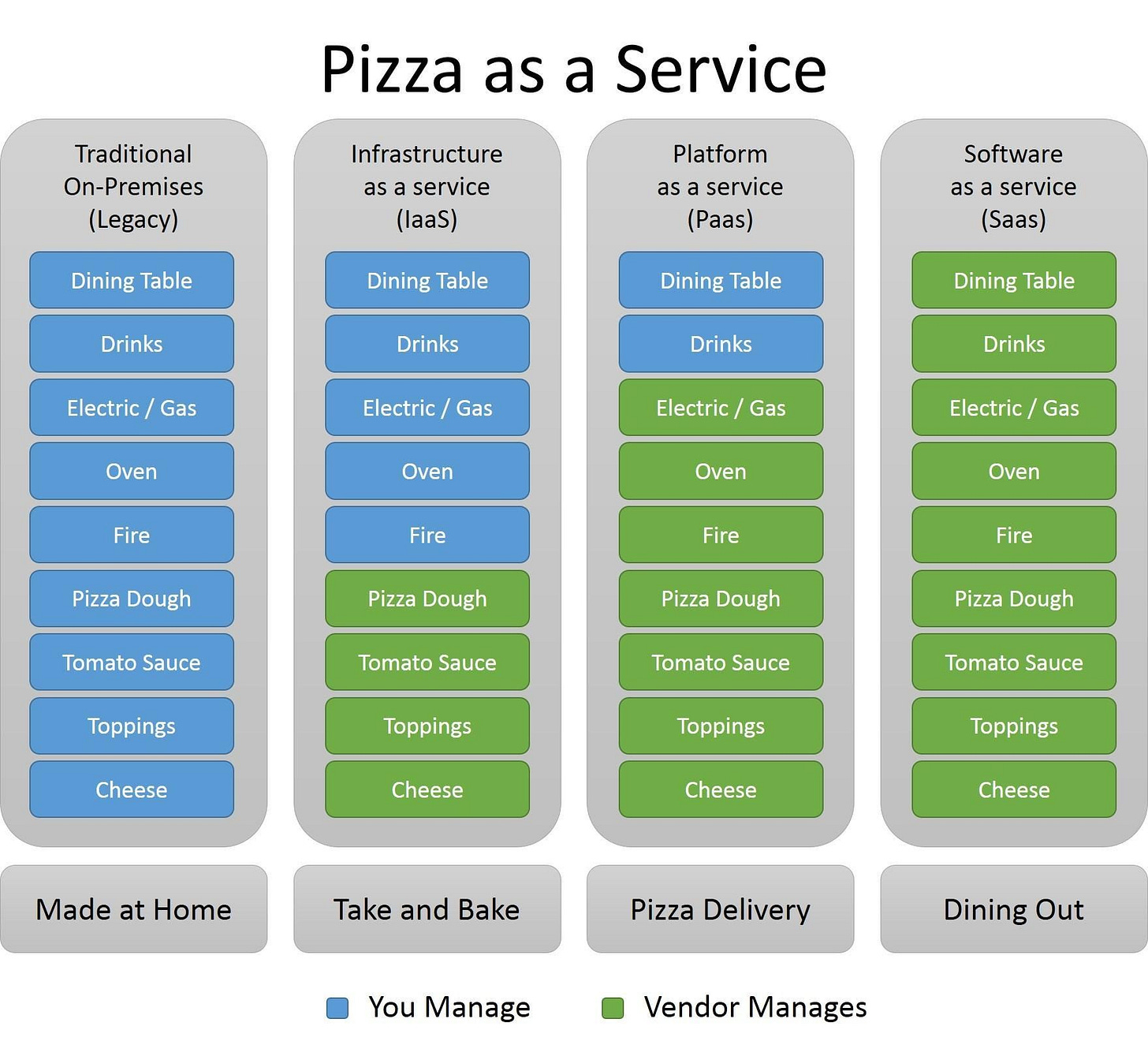

Having set the scene for cloud computing - I will go through the different types of cloud service offerings. The pizza as a service analogy explains the dynamic pretty well. See below.

There are basically four categories: Made at home -> Take and bake -> Pizza delivery -> Dining out. Each service layer removes complexity and adds value at a cost, from made at home, where you do everything, to dining out, where everything from the pizza to the cutlery is set out for you. Now let’s think about this analogy for cloud models.

1. Infrastructure as a Service (IaaS)

The most basic tier. In this model, cloud providers provide the fundamental infrastructure such as servers, networking and storage. Customers manage everything else including the operating system, virtual machines, applications and data.

IaaS offers the highest level of control to the customer over the cloud environment, making it ideal for businesses that want flexibility and customisation. Notably, AWS began with an IaaS-first model.

2. Platform as a Service (PaaS)

Sits above the IaaS layer. In this model, cloud providers manage the underlying infrastructure as well as the platform operating system and runtime applications. Customers are only responsible for managing their applications and data.

The advantage for developers is they can focus on building, testing and deploying applications with no need to worry about managing the infrastructure. Cloud-native companies often adopt PaaS because they do not have legacy systems.

Platforms such as Snowflake and Databricks partner and integrate well with major cloud providers.

3. Software as a Service (SaaS)

This is the top layer of the cloud computing stack. The model delivers fully functional, ready to use applications over the internet. Customers get everything from the infrastructure to the application itself which is managed by the cloud provider including updates, bug fixes and security.

Customers simply pay to use the software, typically via a subscription model and are responsible for managing their own data within the application. This model allows organisations to avoid the complexity of building, hosting and maintaining their own software.

SaaS applications can be provided either directly by the cloud infrastructure provider (e.g., Microsoft with Office 365) or by independent software vendors (ISVs) who host their software on third-party cloud platforms. Microsoft Azure leads in SaaS through its productivity and enterprise tools such as Office 365 and Dynamics. Other major players include SAP, Oracle, Workday, Monday.com and Salesforce. You are basically going to a restaurant and getting everything served to you.

To put this into context, AWS has taken an IaaS-first approach while Microsoft Azure has gone from SaaS down. Both have their own advantages, which I will explore in more depth later.

Market Breakdown

According to Gartner, the public cloud services market, which includes all ecosystem players, is worth around $596b and has grown at 20% annually over the past 5 years. SaaS is the largest segment and includes a range of leading cloud native companies, e.g. Salesforce. The fastest growing segments are IaaS and PaaS, both growing above 20%.

The cloud infrastructure market is where the top cloud providers compete and mainly consists of IaaS and PaaS.

Market growth has been above 20%.

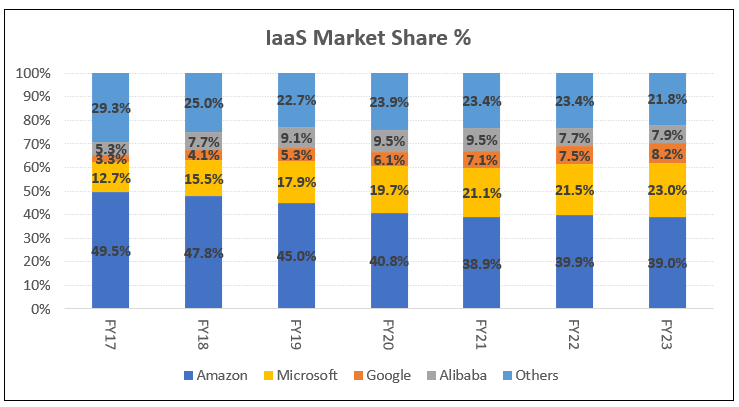

Market share among the top players is shown below. AWS is the outright leader with low 30s share and has gradually lost share. The clear number two is Microsoft with around 25% share. Google and in recent times Oracle have increased share. The top three cloud providers own around 70% of the market, with this number growing at the expense of small to mid-sized players.

AWS is very strong in IaaS with around 39% market share.

AWS has taken the infrastructure first approach and partnered more on the platform and application side. Microsoft is taking a vertically integrated SaaS down approach and bringing its productivity suite and enterprise solution customers to the cloud. Google is the number three player trying to differentiate with data and AI. I will discuss competition in more depth later.

Overall, the market dynamics are solid. Cloud infrastructure is a large market growing above 20% and oligopolistic in nature with three scaled players. The runway is also significant.

Value Chain

The cloud services value chain is quite complex with multiple players - see below image from Generative Value.

The value chain can broadly be separated across:

Hardware, i.e. semiconductors and data centres - represents the networking equipment and chip components within data centres. The majority of value has flowed to leading players such as TSMC and Nvidia who deliver the highest performing chips. Nvidia and TSMC represent 43% and 22% of semi profits and have EBIT margins of 65% and 45% respectively.

Cloud Service Providers - operate the data centres and power the application and enterprise layer. The top three hyperscalers make up 70% of the market. Most of the economics flow to the leaders with scale - AWS and Azure likely have operating margins of 30-40%.

SaaS application providers - include a range of large application players like Adobe and Salesforce who have operating margins above 30%. Data platforms like MongoDB and Snowflake have margins closer to 10%.

The point of this section is to understand the whole operating environment and who derives the most value. The leading semi players have the highest margins but a lot of this has come recently via AI chips.

It’s pretty difficult to separate cloud revenues. What is clearer is that the cloud service providers have great economics and are well positioned.

Business Model

As explained earlier, cloud service providers replace the fixed, capital IT expenditure with variable, operating expenses. It opened up the market for a lot of start-ups who were able to scale businesses with just a credit card.

Traditional IT providers failed to recognise the attractiveness of the cloud model. They were disincentivised by the cannibalisation of existing high margin (80%+) hardware revenue and the significant upfront investments needed for a payback profile that spanned multiple years, while cloud revenue was small with low initial margins. It was the classic innovator’s dilemma.

What they failed to recognise were the benefits of scale and the recurring nature of revenue.

The business model involves large upfront spend, building hyperscaler data centres which are large in size - around 300k square feet (equivalent of around 4 full size football pitches) compared to mid-sized enterprise data centres at 5k square feet. The build requires significant amounts of space and power to support thousands of servers for cloud computing, big data analytics and storage.

Amazon (AWS) Data Center Location in Eastern Oregon.

For those building a global network of data centres, the upfront costs are significant and include:

Land and construction costs to develop energy, water and data centre infrastructure. This can be outsourced or completed themselves.

Network assets including server racks, IP transit, fibre and more.

Network equipment such as servers to run software, i.e. central processing units (CPUs), graphics processing units (GPUs), solid state drives (SSDs) and more. These enable compute, networking and storage.

The split between infrastructure and network equipment costs is believed to be 50/50.

In some instances, the data centre build is outsourced to third-party providers, think co-location data centre providers like Equinix or Digital Realty. Cloud providers lease this space and will only provide the network equipment - significantly reducing the upfront costs required. This works for specific use cases and has been adopted by up and coming AI niche players such as CoreWeave.

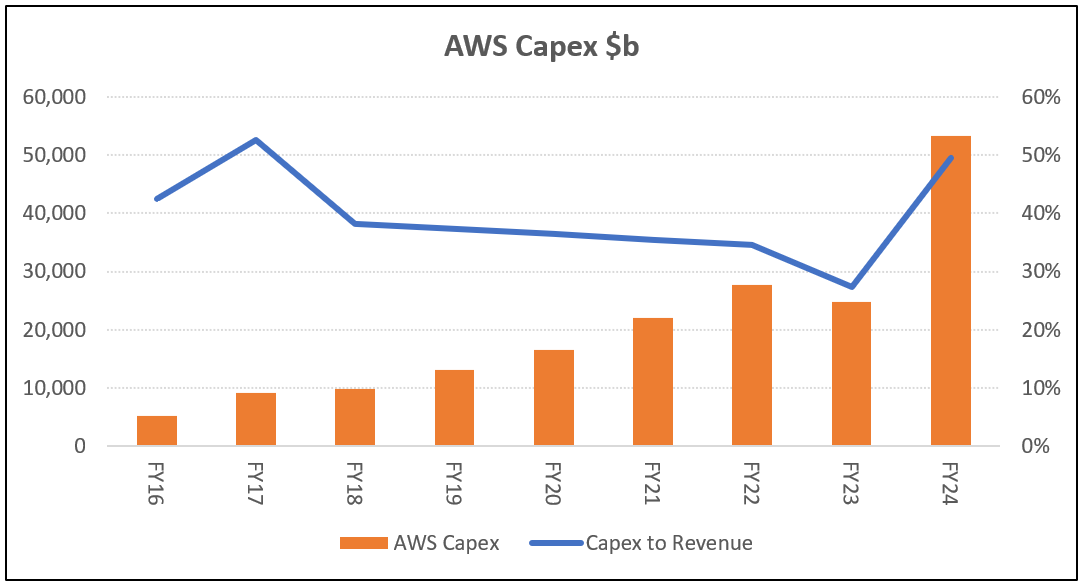

Cloud providers get forward demand indicators, although they generally build without clear customer commitments. Across both the initial cloud and AI build out stages, AWS capex to sales rose >50%.

The costs to operate data centres consist of:

Energy and other utilities to run servers, cooling, ventilation and more. Electricity consumption prices have increased substantially in the last few years

Labour to operate data centres efficiently and securely

Leases charged from data centre developers who purchase the land and rent it out

Depreciation and Amortisation (D&A) associated with the useful life of networking equipment and infrastructure. The cloud service providers have increased the useful life of servers from 3 to 6 years.
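To see why the useful-life change matters for reported costs, here is a minimal sketch assuming straight-line depreciation. The $600m fleet cost is a hypothetical figure chosen for round numbers, not an AWS number; only the 3 and 6 year lives come from the text above.

```python
def annual_depreciation(cost: float, useful_life_years: int) -> float:
    """Straight-line depreciation: spread the cost evenly over the useful life."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return cost / useful_life_years


# Hypothetical server fleet cost in $m (illustrative assumption, not an AWS figure).
fleet_cost = 600.0

dep_3y = annual_depreciation(fleet_cost, 3)
dep_6y = annual_depreciation(fleet_cost, 6)

print(f"3-year life: ${dep_3y:.0f}m per year")
print(f"6-year life: ${dep_6y:.0f}m per year")
print(f"Reduction in annual depreciation expense: {1 - dep_6y / dep_3y:.0%}")
```

Under these assumptions, doubling the useful life halves the annual depreciation charge on the same equipment, which flows straight through to operating margin while the cash spent is unchanged.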

The below shows co-location data centre operator Equinix and its split of operating costs. The company notes that costs are fairly predictable, fixed in nature and represent around 50% of revenue. This provides a barometer for hyperscalers.

Cloud providers can generate better economics by increasing utilisation over their fixed cost base. However, a fine balance needs to be struck between having extra capacity and being overutilised - the latter can cause outages and disruption.

To make things even tougher, customers can scale usage up and down and revenue is recognised on a pay as you go basis. That said, customer spend is generally quite recurring, sticky and tends to increase year on year.
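The utilisation point can be sketched with simple arithmetic: with a largely fixed cost base, the cost per unit of capacity actually sold falls as utilisation rises. The cost and capacity figures below are hypothetical and only there to show the shape of the relationship.

```python
def cost_per_used_unit(fixed_cost: float, capacity_units: float, utilisation: float) -> float:
    """Fixed cost divided by the capacity that is actually in use."""
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    return fixed_cost / (capacity_units * utilisation)


# Hypothetical data centre: $1m of fixed annual cost and 10,000 units of capacity.
fixed_cost = 1_000_000.0
capacity_units = 10_000.0

for utilisation in (0.4, 0.6, 0.8):
    unit_cost = cost_per_used_unit(fixed_cost, capacity_units, utilisation)
    print(f"utilisation {utilisation:.0%}: ${unit_cost:,.2f} per used unit")
```

In this toy setup, moving from 40% to 80% utilisation halves the cost per used unit. The sketch ignores the headroom a provider needs to absorb demand spikes, which is why utilisation cannot simply be pushed towards 100%.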

Cloud providers thus need to aggregate demand forecasting and run tight operations to achieve the best outcomes.

The economics can work. AWS has operating margins of around 40%.

To summarise, the business model is characterised by significant upfront capex and high fixed costs. To deliver attractive economics, providers need scale and operational rigour. Only a few players have achieved this.

Competitive Advantages

Cloud providers build and operate data centres and deliver services such as compute, storage and networking. Sounds pretty easy, right? Judging by how consolidated the market is, it’s actually not.

Scale is a large barrier across multiple aspects:

Capital intensity - requires billions of dollars to build and fit out data centres with no guaranteed payback. The scale of investment and upfront costs has deterred new entrants.

Supplier buying power - the leaders can negotiate favourable discounts with suppliers which reduces per unit costs. Smaller players do not have this luxury.

Significant R&D - spend significantly more to deliver an extensive and leading services portfolio. AWS has probably kept spending >20% revenue on R&D per year.

Higher utilisation - scale means more resources and fixed costs are utilised, bringing down costs per unit. For these reasons, larger data centres are generally more energy efficient.

Hyperscalers can pass on scale benefits and lower costs by either reducing prices or increasing innovation - both done consistently over the years. This increases the barriers to entry. Amazon noted in 2021:

Regular price cuts on all our services has been a standard way for AWS to pass on the economic efficiencies gained from our scale back to our customers. As of April this year, AWS has reduced prices 107 times since it was launched in 2006.

The alternative has been to reap the benefits through operating leverage, which is reflected in AWS’ margin profile below. Microsoft has 41% operating margins in its Intelligent Cloud segment and Google Cloud has 18% operating margins, which are growing rapidly. Scale is less of a differentiator among the 3 market leaders.

Switching costs are high and another barrier. Moving workloads between cloud providers can be very painful. There is a significant advantage to being the primary and incumbent cloud provider, especially for larger organisations for the following reasons.

Shifting often requires a complete rewrite which is time consuming, labour intensive and disruptive. The move typically ends up being more challenging than expected.

It can be costly to run two services in parallel and to bring in various third-party integrators.

Staff need to be retrained due to technical barriers such as a different way to write code, unique interfaces, features and capabilities, authentication methods, development platforms and data migration.

Unforeseen errors often come up when switching.

Moving providers is a big technological decision and needs approval from multiple parties while entire processes need to be updated.

A public cloud user explains the predicament well:

All the infrastructure is totally and utterly different. The authentication methods are different. The audits, the logging, all the other bits around it, the performance, how you see the performance, how you do the monitoring. All of that is different. Essentially, if you want to be multi-cloud, you also have to be on-prem private cloud and public cloud compatible. So you need to be able to run virtual machines anywhere or containers anywhere…And that means you need two lots of expertise. People who've got experience in Cloud A and people who've got experience in cloud B.

Customers need a strong business case beyond just lower costs to switch. It has been described as the equivalent of moving house or moving a business across country.

A new player would have to provide something truly unique to gain share, or the market would have to present some sort of opening.

On top of this, there are other competitive advantages around:

IP - leading cloud providers have 200+ services with deep functionality. These services can be quite specialised, with AWS developing services in-house such as Supply Chain and Forecast that automate inventory forecasting for logistics companies.

Brand - highly regarded and trusted among the developer community. This reputation cannot be built overnight.

Counter-positioning - continue to disrupt the traditional data centre model with a flexible, capital light and lower cost alternative.

Network effects - more customers → more partnerships (third-party software and distributors) → more customers. For example, Salesforce is more willing to partner with a cloud provider that has a large customer base.

The combination of competitive advantages means it is very difficult for new and existing players to gain a foothold. Market share has grown among the top 3 players, and I expect this to continue.

With the surge in AI demand and the associated supply constraints, some other players have managed to grow share. I will explore this more below.

Competition

AWS and Azure are the outright market leaders while Google Cloud is seen as a capable challenger. Oracle and IBM are secondary players and have predominantly been used to support legacy workloads and systems. This is changing for Oracle with AI. There are also smaller, up and coming AI native providers who are capitalising on the shortage of high performance chips. I will discuss the main players below.

The stats across the top 3 players are seen below.

AWS

AWS is widely regarded as the gold standard having been first to market and the outright leader. Its infrastructure-led approach has resonated with start-ups and cloud-native businesses who are not impeded by legacy systems. AWS’ advantages include:

Market Leadership & First-Mover Advantage - often already the incumbent cloud provider.

Security - has been a priority when designing data centres and software. There have been no major security breaches on its core infrastructure.

Services portfolio - offer 200+ services with broad selection and the deepest functionality. Enables it to meet the needs of virtually any customer.

Partner Ecosystem - extensive partner network across enterprise software (SAP, CrowdStrike), data platforms (Snowflake, Databricks) and global systems integrators (Accenture, Capgemini). Customers can access more partners to innovate, scale and support their cloud requirements.

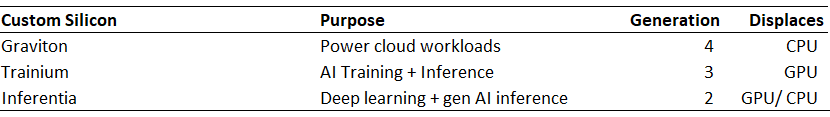

Custom Silicon - developed advanced custom silicon and network equipment with better price-performance than industry leading products. The initiative began in 2012, well before other peers while its acquisition of Annapurna Labs in 2015 prompted releases of Graviton, Trainium and Inferentia. Having custom chips specifically designed and integrated within their own data centres is a key lever to reduce costs and improve performance.

As the first to market with a consistent reinvestment approach, AWS has built leadership across services, partners, customers and custom silicon, although competitors are investing and catching up. The company’s obsession with working backwards from customer needs means it’s constantly bringing new innovations to market.

AWS has disadvantages including its lack of vertical integration (application software), generative AI capabilities and the complexity of services and user interface.

Microsoft Azure

Microsoft Azure is the strong number two player, has a scaled global presence and sticky existing enterprise relationships. Azure has advantages around:

Vertical integration - has operated as a B2B company since 1975. Microsoft has decades-long relationships with existing customers across products such as Microsoft 365, Windows OS, Servers, Dynamics and Power Platform. These products are embedded within customer operations and integrate seamlessly on Azure infrastructure, which heavily influences the purchasing decision.

Bundling - Azure is frequently bundled with other Microsoft products in broader licensing deals. These packages often come with cross-product discounts, making it financially unattractive to adopt competing clouds - particularly since switching away from Microsoft products like Windows is operationally not feasible.

Better migrations - existing on-premise Microsoft customers experience easier migrations, sometimes a lift and shift.

Hybrid cloud - strong support for hybrid environments, allowing customers to transition to the cloud at their own pace as the company has solutions across both segments. Customers can leverage their on-premise server and database licensing in a cloud environment. This is especially valuable for regulated industries or legacy-heavy enterprises.

Developer Ecosystem Lock-in - many enterprises have built software in .NET and C#, Microsoft-native programming languages. The technical alignment reinforces Azure’s stickiness among development teams and IT departments.

High availability - the largest data centre footprint and high availability. Offers greater flexibility in terms of geographic coverage and data residency.

Azure has some structural advantages that make it very difficult to compete against. It has sticky enterprise relationships and highly embedded products that integrate more seamlessly in Azure. Bundling also increases the barriers.

Azure has a stronger proposition for enterprises and often wins against AWS despite having lower services functionality and scale. Azure has been the biggest winner of late. It’s a great business and one to cover in depth in the future.

The disadvantages include past security breaches, while its outsourcing of generative AI models to OpenAI is both a risk and an opportunity (the relationship between the two is turning sour). The licensing practices are also questionable and sometimes predatory.

Google Cloud Platform (GCP)

The number three player is GCP, the main alternative and disruptor to the top two. GCP has fewer customers and market share and is often competing as the second provider. To grow share it has focused on enabling multi-cloud environments and ensuring workloads can readily be switched.

GCP customers tend to be tech-driven businesses such as start-ups, e-commerce, fintech or adtech companies. The group has advantages around:

Leading AI capabilities - has developed advanced AI in-house for more than a decade, with models that compare favourably with other leading AI models.

Data analytics - offer industry leading analytics via data platforms (BigQuery) and strong integrations with Kubernetes and Databricks.

Vertical integration - applications such as Google Workspace and Google Marketing tools integrate well with GCP.

Initial pricing - significant discounts and lower pricing for new users. Some companies will even receive two years for free.

Custom silicon - Google’s Tensor Processing Units (TPUs), purpose built for AI, are used to accelerate both training and inference. The company is up to the 5th generation now, with TPUs integrated across internal and external AI platforms.

GCP’s disadvantages include lower services functionality, fewer customers, a weaker enterprise proposition and a lack of scale, although GCP has been gaining share from a lower base.

The competitive intensity is high among the top 3 players who all have strong capabilities. Azure is best positioned with structural advantages in the enterprise space. AWS has the advantage of being the incumbent with the deepest functionality and high switching costs. Google is strong in data and AI.

The above shows that Azure and GCP have been growing faster than AWS. This is due to a variety of reasons, including AWS’ weaker full stack, hybrid cloud and AI solutions, the shift to more multi-cloud workloads and AWS’ higher base. In addition, the increased take-up of custom chips (lower revenue and costs) is a headwind for growth.

The question is whether AWS can bridge this gap over time. I think Azure and GCP continue to grow faster but there is room for AWS’ growth to re-accelerate. The company’s commentary suggests supply remains constrained. The order backlog also remains healthy. CEO Jassy noted:

So, I think we could be driving – we could be helping more customers and driving more revenue for the business if we had more capacity. We have a lot more Trainium2 instances and the next generation of NVIDIA's instances landing in the coming months. I expect that there are other parts of the supply chain that are a little bit jammed up as well motherboards and some other componentry.

But I do – and some of that is just because there is so much demand right now, but I do believe that the supply chain issues and the capacity issues will continue to get better as the year proceeds.

Oracle

Oracle is the number four player ex China and has experienced recent share gains. The company started providing cloud infrastructure in 2016 and has been a viable option for existing on-premise users to shift databases to the cloud.

The company is the largest traditional database provider with a significant share of enterprise data workloads already on its servers. This proprietary data is a valuable asset for enterprise AI applications.

The recent resurgence has come from Oracle’s ability to serve the growing AI demand from customers such as OpenAI, Meta, xAI, Palantir and more. The involvement in Stargate, a multi-billion dollar project with OpenAI, points to its credentials and potential further growth.

Its partnership with NVIDIA is delivering strong AI price-performance relative to major cloud peers. And this has led to strong demand from major AI labs and heavy investment in AI infrastructure.

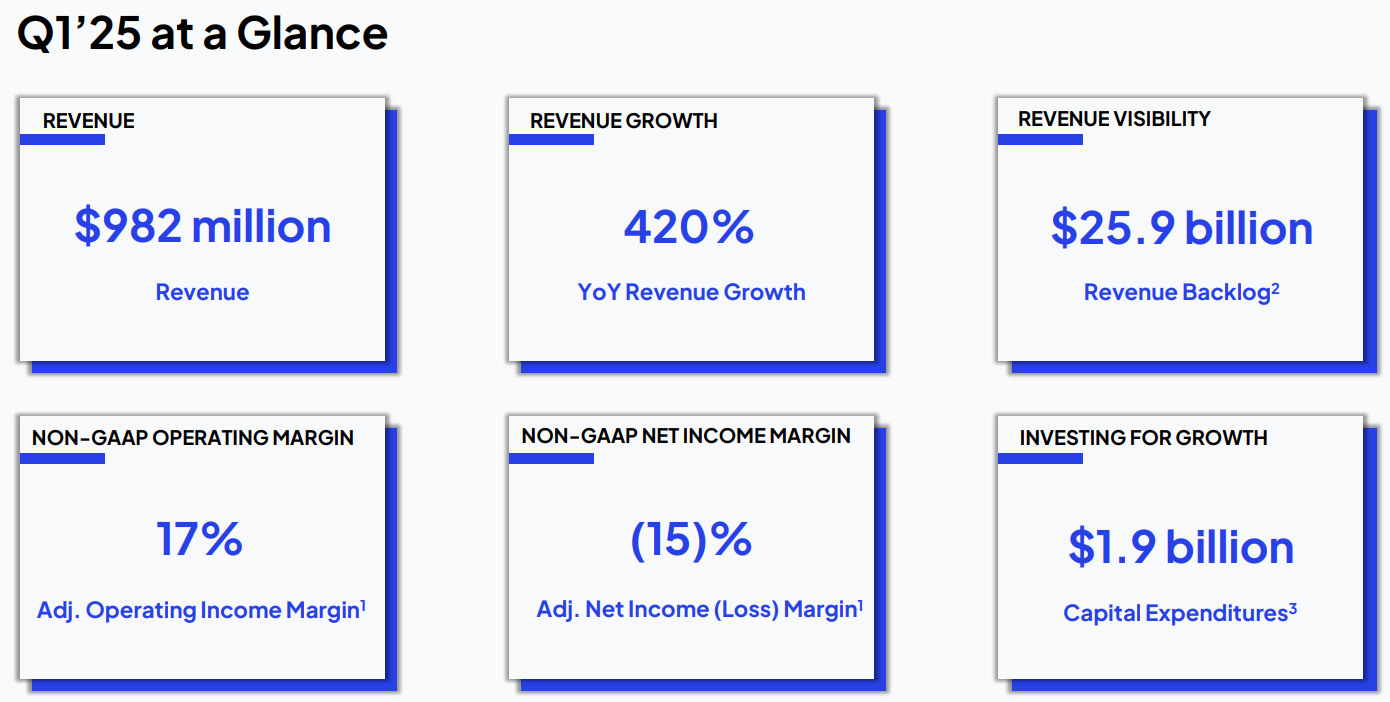

Oracle delivered 46% year-over-year growth in the most recent quarter. Its market share has expanded from 1% in FY21 to 3% in FY25.

CoreWeave

CoreWeave has rapidly established itself in the AI infrastructure market by leasing space in third-party data centres and securing scarce high-performance chips like NVIDIA’s H100. Amid a global shortage of AI compute, the company has met surging demand by delivering capacity to supply-constrained customers, most notably Microsoft, its largest customer. The company is gaining traction largely due to its ability to secure and deploy leading-edge GPUs quickly at scale.

CoreWeave’s revenue is expected to more than double to $5b in FY25 and double again to $11.5b in FY26. The growth is phenomenal. I will discuss the business and its economics in more detail below.

Competition Summary

In the fast-changing world of technology, competitive advantages can erode quickly. For example, what were once seen as leading capabilities in generative AI are quickly being replaced by the next big thing, this time agents and robotics.

The supply-demand imbalance in AI infrastructure has created opportunities for new entrants, with CoreWeave and Oracle emerging as major beneficiaries as they are able to source leading chips and deliver purpose built data centres for AI.

In cloud infrastructure, the barriers remain high and the top 3 players continue to be the main providers. I think there is room for all three to grow given the market size and growth.

Despite share losses, I am comfortable with AWS’ position given its leadership, high switching costs and the focus on innovation and the customer:

AWS said that, due to the ‘speed at which things change’, ‘the guiding principle behind the development of AWS’ offer is iterative innovation.’ AWS said that this allows it ‘to continuously consider what AWS’ customers may want or need and drives it to constantly innovate and develop new products and services.’

and

“Don’t lose sleep fearing your competitors,” Jassy recalls Bezos saying. “If you’re going to lose sleep, lose it over fearing whether or not you’re delivering the right experience for your customers.” From that simple declaration, the seeds of AWS were already being sown — a technology that would one day call the global technology marketplace to account, a simple byproduct of one company’s mission to deliver great experiences.

Runway

The cloud opportunity remains significant. In 2023, Accenture estimated adoption was around 20% and explains:

Instead of being 20% in the cloud and 80% on-premise, those numbers will be reversed. Think of companies moving to 80% in the cloud and doing so not in a decade but in just a few years. That is both the challenge and opportunity ahead.

Amazon CEO Andy Jassy thinks penetration is closer to 15%.

It's useful to remember that more than 85% of the global IT spend is still on premises, so not in the cloud yet. It seems pretty straightforward to me that this equation will flip in the next 10 years to 20 years. Before this generation of AI, we thought AWS had the chance to ultimately be a multi-hundred billion dollar revenue run rate business. We now think it could be even larger.

Whatever the actual number, it is evident that there is a massive opportunity ahead. If we assume 20% penetration of cloud infrastructure services, this translates into a TAM of $1.6t. So a big opportunity for cloud providers to continue migrating businesses to the cloud.
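A quick sketch of the arithmetic, assuming the TAM is simply today's cloud infrastructure market (the $321b figure used further down) grossed up by the penetration rate. The function name is illustrative, and the 15% case is included only to show how sensitive the answer is to the penetration estimate.

```python
def implied_tam(current_market_bn: float, penetration: float) -> float:
    """Gross up today's market size to 100% penetration."""
    if not 0 < penetration <= 1:
        raise ValueError("penetration must be in (0, 1]")
    return current_market_bn / penetration


cloud_market_bn = 321  # cloud infrastructure market today, in $b

for penetration in (0.15, 0.20):
    tam_bn = implied_tam(cloud_market_bn, penetration)
    print(f"{penetration:.0%} penetration -> TAM of ${tam_bn / 1000:.1f}t")
```

The lower the assumed penetration, the larger the implied opportunity, which is why the gap between the 15% and 20% estimates matters.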

As workloads are brought across, customers invariably spend more on services. There is also the added component of generative AI, which was previously non-existent. Some estimates for the AI market:

IDC: AI will generate a cumulative global economic impact of $20 trillion, or 3.5% of global GDP, by 2030

McKinsey: generative AI could add $2.6 trillion to $4.4 trillion annually across the 63 use cases.

Bloomberg Intelligence: “the market for AI inference/fine-tuning, AI workload monitoring, and training infrastructure, including AI servers, AI storage, training compute, cloud workloads, and networking, will increase by over $300 billion from 2023 to 2028, growing at a CAGR of 38% from approximately $79 billion in 2023 to approximately $399 billion by 2028. This market opportunity is expected to include $330 billion related to training infrastructure, which includes AI servers, AI storage, training compute, LLM licensing revenue, cloud workloads, and networking; $49 billion related to inference infrastructure; and $20 billion related to workload monitoring, all of which are supportable by CoreWeave.”

Bloomberg suggests the AI infrastructure market in 2028 will surpass the $321b cloud infrastructure market today. It is anyone’s guess what AI can become, but these numbers are extraordinary when you consider the market was non-existent in 2022.
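The Bloomberg figures can be sanity-checked with a few lines of arithmetic. This is only a consistency check on the numbers quoted above, assuming a 5-year compounding period from 2023 to 2028; the variable names are illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


start_2023_bn, end_2028_bn = 79, 399
components_2028_bn = {"training": 330, "inference": 49, "monitoring": 20}
cloud_market_today_bn = 321

growth = cagr(start_2023_bn, end_2028_bn, years=5)
components_total_bn = sum(components_2028_bn.values())

print(f"Implied CAGR 2023-2028: {growth:.0%}")
print(f"Sum of 2028 components: ${components_total_bn}b")
print(f"Increase over the period: ${end_2028_bn - start_2023_bn}b")
print(f"2028 AI market vs cloud today: {end_2028_bn / cloud_market_today_bn:.2f}x")
```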

To realise the benefits of generative AI, businesses need to have workloads on the cloud. This should accelerate the migration of on-premise infrastructure to the cloud. Few companies will choose to own on-premise data centres.

So hyperscalers and AI players are rapidly deploying capital to capture the opportunity. AWS today has around $110b of revenue. It could be multiples of this in a decade’s time.

Capital Cycle

As an investor, it is important to be wary of capital cycles. In this case, billions of dollars of capital expenditure are being deployed with no guaranteed future returns. Cloud players continue to receive strong demand signals while supply is constrained. Amazon CEO Andy Jassy notes:

We don't procure it unless we see significant signals of demand. And so when AWS is expanding its capex, particularly in what we think is one of these once-in-a-lifetime type of business opportunities like AI represents, I think it's actually quite a good sign, medium to long term, for the AWS business.

Satya Nadella (Microsoft)

“Demand continues to be higher than our available capacity.”

Anat Ashkenazi (Alphabet)

“And a lot of these investments, you think about servers, et cetera, is based on demand we're seeing from customers. So this will translate to revenue in the fairly short term.”

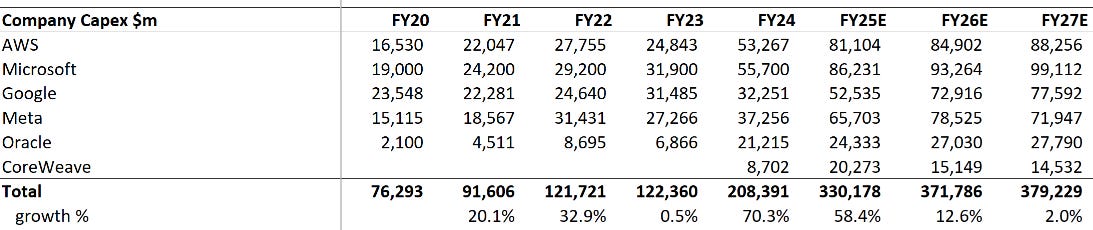

The size of capex growth is substantial, see FY24 and FY25 below. This does not include the extraordinary $500b investment over 4 years under Project Stargate, which is looking to support the capacity needs of OpenAI.

Consensus capex growth forecasts taper off from FY26. Who knows… it could still go higher. Especially as Nvidia releases more GPUs that are more expensive.

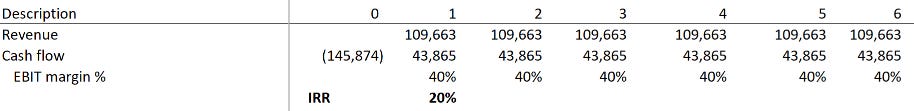

AI players expect a similar return on capital for these investments compared to cloud. My back of the envelope numbers assume:

20% IRR

70% of FY24 total capex is spent on AI investments

Translates to $44b in annual AI profits needed over 6 years

At 40% EBIT margin, this is $110b in AI revenue per year
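A rough sketch of how these numbers hang together, assuming the $44b is an annual profit figure earned evenly over a 6-year asset life and the 20% IRR is computed on a simple level annuity with no terminal value. Those assumptions are a simplification for illustration; the code backs out the upfront investment that such a profit stream would justify and the revenue needed at a 40% margin.

```python
def annuity_factor(rate: float, years: int) -> float:
    """Present value of 1 received at the end of each year for `years` years."""
    return (1 - (1 + rate) ** -years) / rate


target_irr = 0.20
asset_life_years = 6
annual_profit_bn = 44   # annual AI profit needed, $b
ebit_margin = 0.40

# Upfront spend that earns exactly the target IRR on a level profit stream.
implied_investment_bn = annual_profit_bn * annuity_factor(target_irr, asset_life_years)
# Revenue needed each year to produce that profit at the assumed margin.
required_revenue_bn = annual_profit_bn / ebit_margin

print(f"Implied upfront AI investment: ${implied_investment_bn:.0f}b")
print(f"Required annual AI revenue: ${required_revenue_bn:.0f}b")
```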

And if we estimate AI revenues run rates today:

Azure: $13b annualised run rate

AWS: Multi-billion dollar annual run rate business growing at triple-digit %

Google Cloud: generating billions in annual revenue from AI infrastructure and Generative AI solutions

Meta: generative AI products at $2b-$3b in revenue in 2025

Oracle: probably does $2b

CoreWeave: expects $5b revenue in 2025

Some rough estimates get 13+8+8+3+2+5 = $39b, well short of the roughly $110b needed. CoreWeave has the highest revenue relative to capex and is bringing supply online quickly. Revenue for the others should ramp up given the lead times.
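The gap can be laid out explicitly. The per-company values below follow the order of the sum in the text; the $8b figures for AWS and Google Cloud and the $3b for Meta are rough point estimates for "multi-billion" style disclosures, not reported numbers.

```python
# Estimated AI revenue run rates in $b (point estimates are assumptions).
ai_run_rate_bn = {
    "Azure": 13,
    "AWS": 8,           # assumption for "multi-billion dollar"
    "Google Cloud": 8,  # assumption for "billions in annual revenue"
    "Meta": 3,          # top of the $2b-$3b range
    "Oracle": 2,
    "CoreWeave": 5,
}
required_revenue_bn = 110  # $44b annual profit at a 40% EBIT margin

total_bn = sum(ai_run_rate_bn.values())
gap_bn = required_revenue_bn - total_bn

print(f"Estimated AI revenue today: ${total_bn}b")
print(f"Shortfall vs required: ${gap_bn}b")
print(f"Coverage of required revenue: {total_bn / required_revenue_bn:.0%}")
```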

At the same time, the cost of running these models, known as inference, is coming down. The AI Index Report 2025 notes:

AI becomes more efficient, affordable, and accessible. Driven by increasingly capable small models, the inference cost for a system performing at the level of GPT-3.5 dropped over 280-fold between November 2022 and October 2024.

and

Epoch AI estimates that, depending on the task, LLM inference costs have been falling anywhere from nine to 900 times per year.

Hardware and software are improving, custom silicon is advancing and competition continues to heat up - driving the cost of inference down. Chinese player DeepSeek has shown it can train and deploy models at a fraction of the cost. Not a lot of revenue actually comes from enterprises today, with falling inference prices the key factor in bringing in more customers.

AWS CEO Matt Garman notes:

AI inference, the ability to go do work — is going to continue to get more capable over time, and I think that there is a long road of this to get much, much, much more capable over time. And it’s going to get much less expensive to run over time, which I think then explodes the number of ways in which people will make it useful. Whether it’s running agents, doing other workflows, or performing long-running reasoning tasks, I think there’s a whole host of things that you can imagine. And so, there’s just a continuum of where the things eventually land and where you’re able to ask the computers to do more for you at lower costs.

AWS recently cut prices for H100, H200, and A100 instances by up to 45%. The company notes:

As Amazon Web Services (AWS) grows, we work hard to lower our costs so that we can pass those savings back to our customers. Regular price reductions on AWS services have been a standard way for AWS to pass on the economic efficiencies gained from our scale back to our customers.

As AI models get more efficient, it raises questions about the level of capex spend. The risk is in overbuilding capacity. I do not know the answer here.

Rather, I am comfortable with AWS’ spend given its strong balance sheet, profitable growth and the ability to slow capex if the demand equation changes. Its level of capex as a percentage of revenue is comparable to the early days of the cloud build and is a necessary upfront investment to build out capabilities. There are also demand signals and a clear demand-supply imbalance.

AI Unit Economics

A key question is what the unit economics look like for these AI investments.

The GPUs used to power AI are substantially more expensive and power intensive than CPUs. A general assumption is the return profile would be lower than cloud.

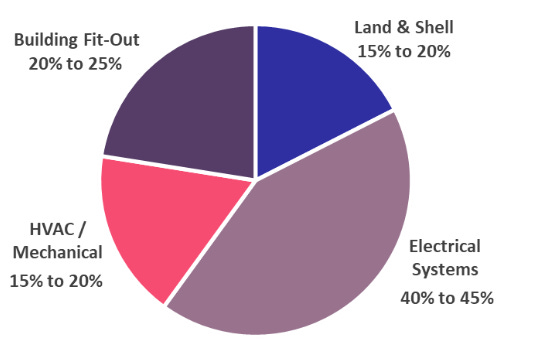

As discussed earlier, the general build and fit out of cloud data centres requires upfront investment into electrical systems, fit out, land and shell and cooling.

Development costs are estimated to range from $625-$1,135 per gross square foot, which translates into $264m at the midpoint for a 300k square foot hyperscaler facility. Including networking equipment, the total probably comes to around $1b.
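The midpoint figure follows directly from the range. A small sketch of the arithmetic, with the low and high ends shown for context (variable names are illustrative):

```python
cost_per_sqft_low, cost_per_sqft_high = 625, 1_135  # $ per gross square foot
facility_sqft = 300_000

midpoint_per_sqft = (cost_per_sqft_low + cost_per_sqft_high) / 2
midpoint_cost_m = midpoint_per_sqft * facility_sqft / 1e6

for label, rate in (("low", cost_per_sqft_low),
                    ("midpoint", midpoint_per_sqft),
                    ("high", cost_per_sqft_high)):
    print(f"{label}: ${rate * facility_sqft / 1e6:,.1f}m")
```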

AI data centres cost multiples of this:

Meta AI data centre spans 4m square feet and is worth $10b

2 AWS data centres in Jackson, Mississippi expected to cost $16b

Stargate project featuring OpenAI, Oracle and Softbank is building infrastructure worth $500b over 4 years. The primary focus is large-scale, gigawatt-level data centers in the U.S. with first year spend to be $100b.

The sites are bigger, consume more energy and are more expensive.

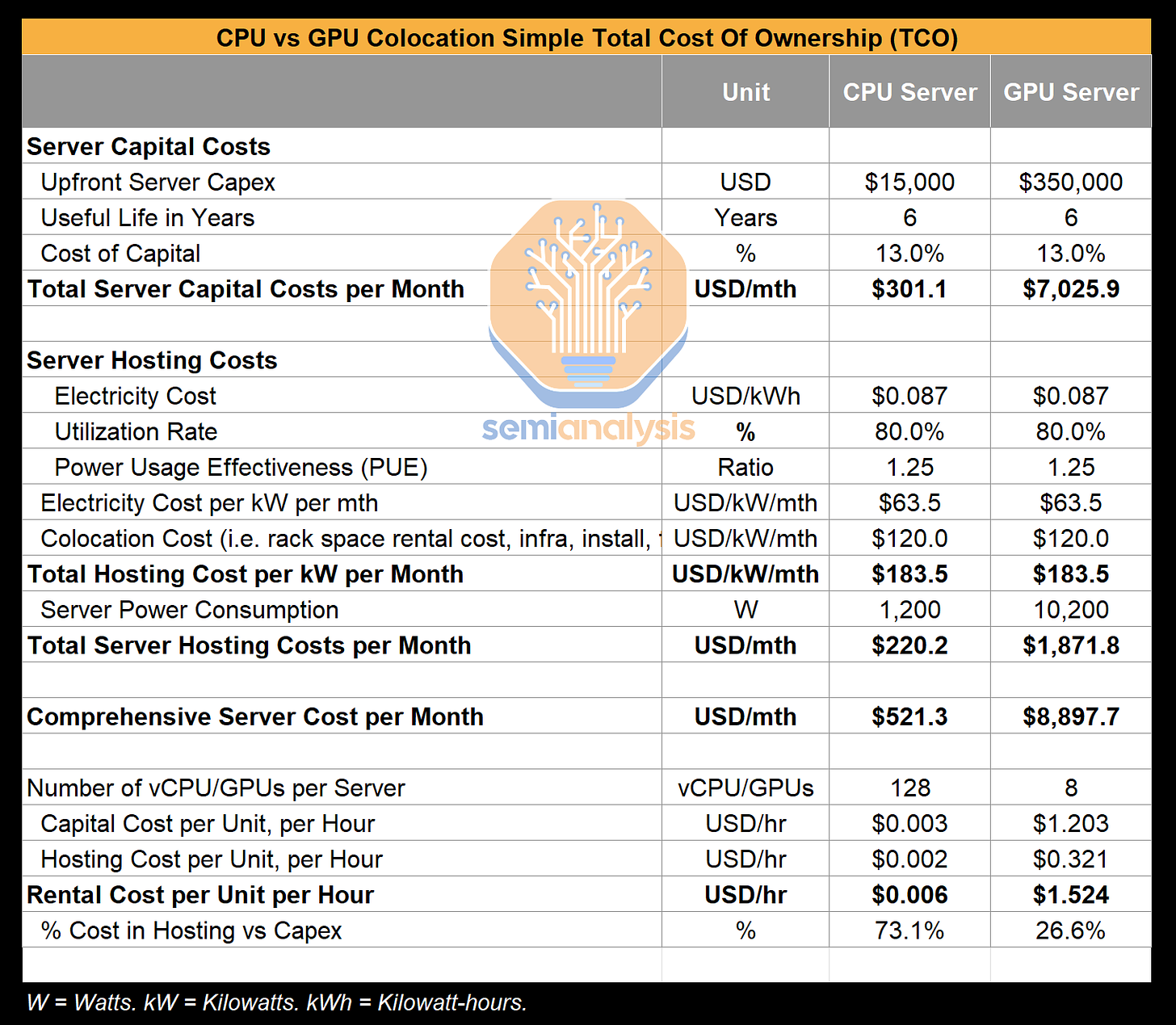

The two main components needed to deliver AI applications are GPUs and power. GPUs are preferred over CPUs due to their superior parallel processing capabilities and specialised architecture for handling large AI datasets. There are intense training and compute costs associated with them. SemiAnalysis provides an estimated total cost of ownership analysis below.

To put it more simply:

Upfront GPU server costs are 23x more than CPUs

GPU servers consume 9x more power

GPU server operating costs are 9x higher

Total GPU server costs are 17x higher

Rental cost per GPU is $1.5 per unit per hour vs $0.006 on CPU

Upfront capital represents 73% for GPU servers vs 27% for CPUs

New AI data centres have hundreds of thousands of GPUs. The GPU cost is thus significant which explains Nvidia’s explosive revenue growth and why cloud players are rapidly developing custom silicon to try and reduce these costs.

The costs for GPUs continue to rise with associated performance improvements. The latest Grace Blackwell 200 is estimated to cost around $60-70k which is triple that of the H100 and H200.

So can the cloud providers make it work?

It seems so. As described above, CoreWeave has found a niche as a key AI infrastructure provider for AI labs such as Mistral and OpenAI, as well as for Microsoft Azure. The company’s recent IPO provides a barometer for what unit economics can look like. The business model is pretty good. CoreWeave signs contracts in advance, with initial prepayments that help fund GPU purchases. This enables it to sustainably buy more GPUs and satisfy demand.

Our committed contract customers are AI labs and AI enterprises who require massive volumes of specialized compute at scale for high-intensity AI workloads. We typically do not submit purchase orders for systems without having a committed contract that matches the level of compute generated by such systems.

The economics are also positive.

Using committed contracts that were in effect as of December 31, 2024 as a basis, we anticipate that our average cash payback period, including prepayments from customers, will be approximately 2.5 years. Our cash payback period is the time we anticipate it would take to break-even on our investment in GPUs and other property and equipment through adjusted EBITDA.

The calculation is performed on a per-GPU basis, starting with the estimated cash spent on property and equipment (calculated as change in total property and equipment excluding the change in construction in progress) during the quarter, divided by the number of GPUs that went into service in the period. This investment is then adjusted for any prepayments received from customers under committed contracts, resulting in the net cash outlay on a per-GPU basis. This net figure is then divided by the product of our adjusted EBITDA margin and the average annualized committed contract revenue per GPU for the period.
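The payback definition quoted above reduces to a simple ratio. Here is a minimal sketch of it; the function and parameter names are my own, and the inputs are purely illustrative round numbers chosen to land near the 2.5 years quoted, not CoreWeave disclosures.

```python
def cash_payback_years(capex_per_gpu: float,
                       prepayment_per_gpu: float,
                       adj_ebitda_margin: float,
                       annual_revenue_per_gpu: float) -> float:
    """Net cash outlay per GPU divided by annual adjusted EBITDA per GPU."""
    net_outlay = capex_per_gpu - prepayment_per_gpu
    annual_ebitda = adj_ebitda_margin * annual_revenue_per_gpu
    return net_outlay / annual_ebitda


# Hypothetical inputs for illustration only.
payback = cash_payback_years(
    capex_per_gpu=23_000,
    prepayment_per_gpu=3_000,
    adj_ebitda_margin=0.64,
    annual_revenue_per_gpu=12_500,
)
print(f"Cash payback: {payback:.1f} years")
```

Larger customer prepayments shrink the numerator, which is why the committed-contract model shortens payback even before any change in pricing or margins.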

Assuming the useful life of servers is actually 6 years (could be lower given the massive technological advances) - it basically pays back the initial investment after 2.5 years and delivers additional profits and returns over the next 3.5 years. My basic per GPU assumptions can be seen below.

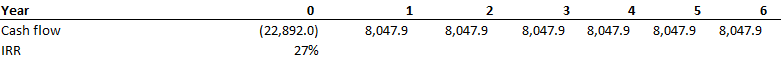

So it earns $8k per GPU per year and spends $23k as an initial investment. The math roughly lines up with the price of an H100 chip at around $24k. The IRR calculation per GPU is seen below.

I get to an IRR of 27%, which is pretty high. The company’s EBITDA margins are 64%. As to how clean this number is, there is the below adjustment. The expected useful life would also change the economics: if it is presumed to be lower, then it implies a lower IRR.
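A minimal sketch of the per-GPU IRR, using the rounded $23k outlay and $8k annual earnings from above and assuming level cash flows with no residual value. Because the inputs are rounded, the result should land in the mid-20s percent rather than exactly on 27%. The bisection solver and function names are illustrative; shorter useful lives are included to show the sensitivity.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value with the first cash flow at time zero."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list[float], lo: float = 0.0, hi: float = 1.0,
        tol: float = 1e-7) -> float:
    """Bisection IRR for one upfront outflow followed by inflows.

    Assumes NPV is positive at `lo`, negative at `hi`, and falls as the rate rises.
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if npv(mid, cash_flows) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def gpu_cash_flows(upfront: float, annual_ebitda: float, life_years: int) -> list[float]:
    return [-upfront] + [annual_ebitda] * life_years


for life in (4, 5, 6):
    rate = irr(gpu_cash_flows(23_000, 8_000, life))
    print(f"{life}-year useful life -> IRR of about {rate:.0%}")
```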

The returns may not look as strong straight away for hyperscalers as they tend to build rather than lease, which means capacity takes longer to bring on. CoreWeave does not have these associated lead times.

For AWS, it is difficult to break down unit economics as AI capacity can be spread internally and externally. Over time, though, AI returns are expected to match cloud returns. Group CEO Jassy notes:

Data centers, for instance, are useful assets for 20 to 30 years. And so, I think we've proven over time that we can drive enough operating income and free cash flow to make this a very successful return on invested capital business. And we expect the same thing will happen here with generative AI.

I think CoreWeave provides a barometer for how profitability can work out over time. Current economics for cloud providers reflect the large upfront build-out costs, a portion of which is not immediately revenue generating and relates to assets with useful lives of 20-30 years.

My estimates have AWS revenue re-accelerating slightly faster over the next few years.

End Demand

The majority of demand to date has come from AI labs such as OpenAI, Anthropic, Perplexity and a bunch more. For this to continue, these labs will need to monetise customers and deliver a sustainable, profitable model.

Developing leading AI models is capital intensive as they are trained on proprietary systems and datasets over multiple compute months which costs millions of dollars. Costs have continued to rise as AI labs drive technological advancements.

Despite the exponential revenue growth forecast, OpenAI does not expect to be profitable until 2029, when revenue is forecast at $101b. Hard to fathom.

AI labs have benefited from the capital markets’ willingness to support heavy investments, driven by the promise of future payoffs. The below shows the estimated annualised revenue of the big 4 AI labs and funds raised. OpenAI is at the top of the list with $64b raised, generating $9b in revenue and last valued at 33x revenue.

These players make money via two means: subscription revenue and developer API fees. Partnerships are also increasing, e.g. OpenAI with Microsoft and Perplexity with Motorola.

As discussed above, the big barrier to broader adoption is inference costs, which need to come down. BondCap notes:

The broader dynamic is clear: lower per-unit costs are fueling higher overall spend. As inference becomes cheaper, AI gets used more. And as AI gets used more, total infrastructure and compute demand rises – dragging costs up again. The result is a flywheel of growth that puts pressure on cloud providers, chipmakers, and enterprise IT budgets alike

Once this happens, AI can serve more use cases and be more attractive for businesses. The primary benefit to date has been in automating customer service and software development. The rise of AI agents seems to be the next frontier, where models can execute tasks such as booking meetings, submitting reports, logging into tools or orchestrating workflows across platforms.

OpenAI growth has been strong, with revenue growing from $3.7b in 2024 to $10b of annualised revenue now.

AWS CEO Matt Garman explains the return profile for AI labs:

I think the question is when does … The folks who are making the huge investments are the ones who are building foundational models from a software perspective and then reselling those foundational models. It’s a good question. I don’t know the answer to when that investment kind of fully pays off for an OpenAI or an Anthropic. I think Amazon and Google probably have a different math of when we can make those pay off because you get internal usage of them from your own use. I don’t know that. But there’s a lot of smart people investing in, continuing to put investment in a broad swath of AI companies. And you have to believe, which we do, that there is a massive economic benefit from many of these AI capabilities that are orders of magnitude bigger.

I do think it really plays into that math equation. As inference gets cheaper and more capable there are multiple orders of magnitude more inference to be done. And that is when it ultimately starts to pay off, I think, for a lot of those model providers, and in a huge, massive way.

There has been incredible consumer user adoption. I think this flows through to businesses over time. The major technology leaders all see generative AI as a transformational technology.

It’s pretty clear Amazon is confident in the longer-term potential across businesses, given its note to internal staff:

Today, in virtually every corner of the company, we’re using Generative AI to make customers lives better and easier. What started as deep conviction that every customer experience would be reinvented using AI, and that altogether new experiences we’ve only dreamed of would become possible, is rapidly becoming reality. Technologies like Generative AI are rare; they come about once-in-a-lifetime, and completely change what’s possible for customers and businesses. So, we are investing quite expansively, and, the progress we are making is evident.

The risk is demand does not eventuate due to:

Low ROI for businesses

Commoditisation of models with limited incremental improvements

Lack of viable use cases

Cost and complexity of implementation

These are areas to watch but there is momentum as AI continues to progress, lower inference costs are improving ROI for businesses and agentic AI is providing more practical use cases.

My expectation is for adoption to happen, but it may take longer as enterprises need to follow certain processes and manage infrastructure requirements first.

I think the cloud service providers present the best risk-return profile to get exposure to generative AI. They are major beneficiaries if generative AI becomes more widely adopted. And if it doesn’t, then it’s wasted capex they can afford.

But I would not want to bet against the technology leaders who continue to signal progress and the transformational impact it will bring.

How is AWS placed?

In November 2022, ChatGPT burst onto the scene and shifted the cloud leadership dynamics away from AWS to Azure via its OpenAI partnership. Azure owned the rights to the leading large language model while Google had already invested for a decade in its own models. AI capabilities increasingly became a key purchasing decision for cloud customers.

AWS does not have an exclusive and leading AI model on its platform. While its recently released Nova models can handle high-throughput, low-value tasks, they are nowhere near as capable as the leading models. This remains a strategic disadvantage for AWS.

AWS is betting there will be multiple leading models used across specific niches, e.g. Mistral for edge and hybrid, Anthropic for safety and clarity. The company has positioned itself via its Bedrock platform, providing seamless access to multiple leading foundational models from Anthropic, Meta and more. Other players now have similar intentions, with Microsoft announcing its Azure AI Foundry platform in November 2024, while Google has offered its Vertex platform since 2021.

AWS’ main strategic intent is to bring cloud and compute costs down. Custom silicon is key here. The company’s Graviton chips are widely adopted for general purpose workloads, while Trainium has become core for its internal AI stacks. Trainium2 offers 30-40% better price-performance than standard GPU instances. Trainium3 is expected to be up to twice as fast and 40% more energy-efficient than Trainium2. It keeps getting better.

AWS has the advantage of having focused on custom silicon much earlier. The company is in its third generation for Trainium and Inferentia and fourth generation for Graviton. Uptake has been strong with AWS noting that 50% of new CPU capacity in the last two years has come from Graviton. This explains why revenue growth may not be as spectacular but margins have expanded. Amazon CEO Jassy notes on margins:

And we're also seeing the impact of advancing custom silicon like Graviton, it provides lower cost not only for us but also for our customers, a better price performance for them.

Industry leaders such as Snap, Twitter, Netflix, Epic Games, Splunk, and Nielsen have adopted Graviton processors.

Google has strong custom silicon capabilities with its TPU (Tensor Processing Unit) chips widely considered to be ahead for AI workloads. However, the company has only just released a general purpose CPU, Axion, that competes with Graviton. Azure is well behind, with its first custom AI chip announced at the end of 2023.

To stay ahead in custom silicon, AWS has formed a deep strategic partnership with Anthropic to not just be its primary cloud and training provider but a key partner. AWS is using Anthropic to collaborate on the design of new custom chips and be a proof-of-concept to effectively scale them across AI data centres.

Anthropic will use AWS’ Trainium and Inferentia chips to train its next generation models in Amazon’s new Ultracluster supercomputer called Project Rainier. The project should deploy 400k Trainium2 chips while the models are expected to be 5 times more powerful in computational power. By having Anthropic as a frontier lab on its cloud, AWS is able to better integrate Anthropic’s models into Bedrock, scale its custom chips and rapidly improve them.

AI is becoming a cost game and AWS’ leadership in custom chips remains a key competitive advantage here.

Technology is moving quickly and AWS could quickly catch up in the AI race. Its position is not as troublesome as first thought and the company is leading on custom silicon, which is what looks to matter most now. Early investments into its Bedrock platform were also prudent, and this seems to be where things are headed.

What can AWS grow at?

AWS has grown at a 25% annual rate over the past 5 years, which is pretty good but just below the 28% market growth rate. AWS still has multiple levers for growth:

Increased consumption from existing customers and high switching costs

New customers and services

AI revenue

Reduced optimisation of workloads

I expect discounting and greater uptake of custom silicon to be headwinds to revenue.

Given the runway across cloud and AI, I think 20% industry growth rates are sustainable. AWS can grow just below market levels at high-teens - which is conservative in my opinion. Importantly, it is not a winner take all market and there is enough room for at least 3 players to win.

Financials

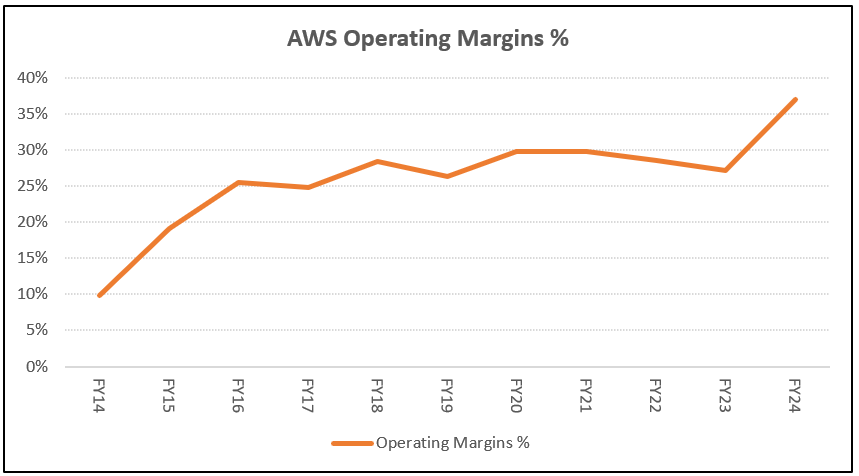

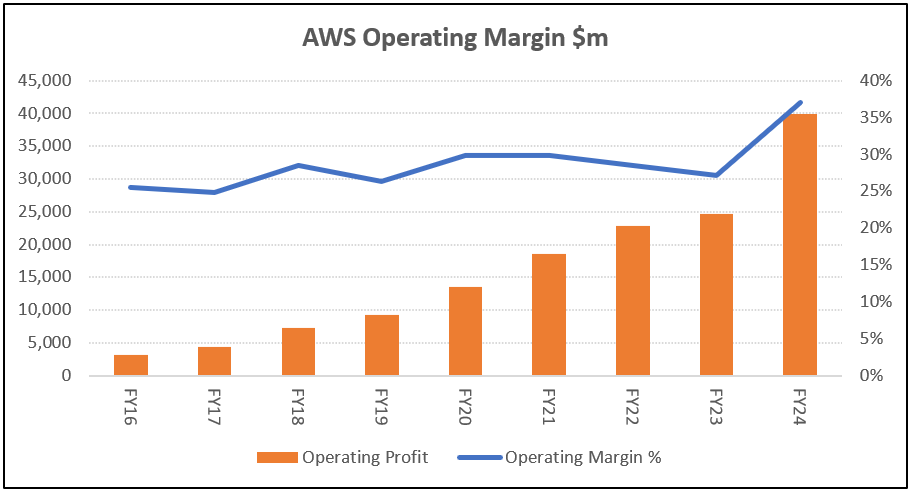

In FY24, AWS delivered revenue of $108b up 19% and operating profits of $40b up 62% with operating margins of 37%. Great numbers. Despite more modest revenue growth, operating margins have expanded.

Margin expansion has benefited from the company gradually increasing server useful lives from 3 years before 2020 to 6 years by 2024.

Headwinds are on the horizon. AWS capex has ramped significantly from FY24 and this is expected to continue. The company has also reverted some server useful lives back from 6 years to 5 years:

Changing the useful lives of a subset of our servers and networking equipment, effective January 1, 2025, from six years to five years. For those assets included in “Property and equipment, net” as of December 31, 2024, whose useful life will change from six years to five years, we anticipate a decrease in 2025 operating income of approximately $0.7 billion.

D&A should ramp higher in the outer years.

The company is balancing higher D&A by bringing down costs via low-cost custom silicon, optimising utilisation, maximising power usage and reducing headcount. This has seen margins actually continue to rise from 37.6% to 39.5% in 1Q25.

So far AWS has surprised to the upside on margins. Given the large capital investments into AI, the uncertain near-term returns and higher D&A, I think a cautious view on margins is prudent. My forecasts imply margins revert downwards and stabilise.

Risks

In the world of technology, things change quickly and what seemed like durable competitive advantages can dissipate quickly. AWS was once the king and is now catching up due to AI. The main risks for AWS going forward are:

Competition - highly competitive market where other players may win more deals due to the SaaS down approach, vertical integration, pricing or better capabilities. Customer purchasing decisions are increasingly shifting to AI. To win, AWS needs to innovate, delight customers and stay at the forefront of technology. Its focus on custom silicon and lowering compute costs is key.

Margin contraction - as discussed earlier the cost of building AI data centres is significantly higher than traditional cloud infrastructure. If the returns are substantially lower, this invariably reduces operating margins. CoreWeave provides a barometer for profitability while the upfront nature of investments should see revenue flow through over time.

Generative AI - the risk is AWS falls behind in generative AI. Other AI models from OpenAI and GCP are not accessible on AWS. If these models continue to significantly outperform, then customers may choose to bring more workloads to competitor platforms. This remains a risk, although OpenAI has begun to use other cloud infrastructure providers and may down the line provide its models across other platforms to expand revenue.

Market slowdown - market growth of 20% is quite high and may slow over time, especially with the expected reduction in inference costs or if AI labs consolidate. I think the significant cloud and AI runway points to consistently strong growth.

Regulation - government intervention could lead to more scrutiny of data and AI practices and may limit progress. Anti-trust is also a risk but this seems higher for competitors than for AWS.

I also discussed the risks around inference costs and capex above. There are multiple risks to consider.

Valuation and Forecasts

I wanted to finish with valuation as a whole for Amazon. The key metrics are below:

My forecasts imply:

Revenue compounds at 10% from higher AWS and advertising growth offset by lower retail revenue growth

Operating costs ex D&A grow at 7-8% from mix-shift to higher margin segments and cost optimisation

Operating margins lift from 11% in FY24 to 16% in FY28

2% share dilution annually

EPS grows at 20% per annum

ROIC increases from 16.8% to 21%
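A rough bridge from the revenue and margin assumptions to per-share growth, as a sanity check rather than a reproduction of the model. It assumes a 4-year window from FY24 to FY28 and ignores interest, tax and other below-the-line items, which is why it need not match the 20% EPS figure exactly.

```python
revenue_cagr = 0.10
margin_start, margin_end = 0.11, 0.16  # operating margin FY24 -> FY28
years = 4
annual_dilution = 0.02

# Margin expansion compounds on top of revenue growth.
margin_uplift = (margin_end / margin_start) ** (1 / years)
op_profit_cagr = (1 + revenue_cagr) * margin_uplift - 1
# A growing share count dilutes per-share growth.
per_share_cagr = (1 + op_profit_cagr) / (1 + annual_dilution) - 1

print(f"Operating profit CAGR: {op_profit_cagr:.1%}")
print(f"Operating profit per share CAGR: {per_share_cagr:.1%}")
```

On these inputs, most of the per-share growth comes from revenue and margin expansion in roughly equal parts, with dilution taking a couple of points off.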

The financials reflect a business shifting from a low margin retail business into a technology conglomerate generating profits from advertising, subscriptions, cloud and AI. Earnings should become less volatile due to the mix-shift and cost focus. There is also a long-term runway to continue expanding margins.

Given the significant growth capex, Amazon’s FCF to net income conversion has been low at around 25%. The capex ramp is expected to slow from FY26, which should result in better conversion. See below FCF estimates taking out share-based payments and leases.

Using a 10% discount rate and FCF terminal growth rate of 5%, Amazon can deliver an IRR of 15%. Note I exclude share-based payments and leases from FCF.

Using a multiples approach, Amazon trades on a fwd P/E of 30x (based off my estimates) and can compound earnings at 20%. The earnings multiple compares favourably to its 10-year historical P/E of 78x. Note Amazon also does not adjust earnings and this is a pure statutory number.

I think a forward P/E of 30x is reasonable and if this multiple remains the same, then stock returns will reflect earnings growth - which is 15-20% over time. Quite compelling.
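The link between earnings growth and stock returns can be made explicit. A minimal sketch, assuming price equals P/E times EPS and ignoring dividends and buybacks; the 5-year horizon and the de-rating to 25x are hypothetical inputs used only to show the sensitivity to the exit multiple.

```python
def annualised_return(eps_growth: float, pe_start: float, pe_end: float,
                      years: int) -> float:
    """Annualised price return when price = P/E x EPS."""
    return (1 + eps_growth) * (pe_end / pe_start) ** (1 / years) - 1


flat_multiple = annualised_return(0.20, pe_start=30, pe_end=30, years=5)
derated = annualised_return(0.20, pe_start=30, pe_end=25, years=5)  # hypothetical

print(f"Constant 30x multiple: {flat_multiple:.1%} a year")
print(f"De-rating from 30x to 25x: {derated:.1%} a year")
```

With an unchanged multiple the return equals EPS growth; any compression in the multiple comes straight off that number.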

Investment Case

Amazon represents a compelling opportunity to buy into a market leader with exposure to two highly attractive industries:

Ecommerce - grows at high-single digits with runway to lift online penetration from 20% to 40%

Cloud - grows at 20% with only 15-20% of IT infrastructure in the cloud today, a split which is expected to flip

Amazon is becoming more than just an online retailer. It’s earning more from Advertising, Subscriptions and Cloud, which are higher margin and more recurring revenue streams. It is also becoming a better business with less volatile earnings.

In addition, the retail operations are past peak investment. Cost to serve in retail continues to fall with scale and efficiencies. Retail margins are around 2-3% and remain below traditional retailers - who operate inefficient store networks. There is a runway to grow margins above these levels with the benefits of generative AI only beginning to be realised.

Summary

In summary, Amazon is a high-quality, long-term compounder for the below reasons:

Leader in two attractive industries with long-term growth drivers

Superior product with strong share position

Consolidated markets with few capable peers

Long runway to reinvest capital at high returns

Strong management team focused on innovation

Solid balance sheet with limited debt

Diversified business model across revenue, customers and geography

Improving financials with high incremental ROIC and margins

There is a pretty high degree of confidence the business will be larger in 5 years’ time. It trades on a reasonable valuation. Looks good to me.